We don’t expect new customers, marketers, or CRO specialists to know how to communicate with A/B test developers, especially if it’s their first time trying web optimization experiments. When you outsource A/B test development for web optimization, the most important thing is conveying your ideas to the team. You want to do so efficiently, but you also don’t want to skip any details, because missing details lead to a time-consuming back-and-forth of questions and answers.

As of now, we’ve deployed 5000+ experiments. We built this checklist from the communication gaps we faced during our initial years. At Brillmark, we encourage our clients to fill in a premade form with all necessary information regarding the experiment, which is an A/B test campaign template version of this list. This helps prevent testing problems caused by a lack of information.

This article is for website owners and CRO experts who are planning to hire A/B test developers to build and run test ideas on their sites. It can be used as an A/B testing plan template, too. Even if you already have a team, this article will still be helpful as an A/B test development checklist.

Basic Necessities for Communication With A/B Test Developers

Have a Project Management and Communication Platform

Before you can exchange information, you need a platform where you can use an A/B test tracking template. This should be a space where you can:

- Share the experiment idea, hypothesis, details, etc. for us to start building the experiment.

- Track the experiments and their tasks.

- Discuss the task together.

- Exchange necessary links and files.

- Resolve and fix any issues, bugs, errors, etc.

- Store the previous or current experiment to fall back on whenever needed.

Examples: Slack, Trello, Wrike, email, or any similar platform you’re familiar with.

Below is the format we use to exchange experiment details with our clients, with a brief explanation and example for each heading mentioned. It consists of all the details we need to start building the test. This is where conversion rate optimization efforts take shape and are validated as fruitful ideas. If you already use a format of your own, you can compare it with the following for additional ideas.

Mandatory Details You Need to Share With A/B Test Developers

When you create an A/B test campaign, you can’t just send your idea as text and expect it to take form. To start building, any developer will need a few necessary details, including access to your A/B testing tool, links to the pages being tested, the audience you want to target, a detailed explanation of the particular change being made, and the number of variations.

You can also point out whether you need any additional changes during the building process, want to see a preview link, or want to see the test after it’s made live on the site. For a smooth process, communication is key.

Below is the list of details you should mention in your A/B test experiment campaign message.

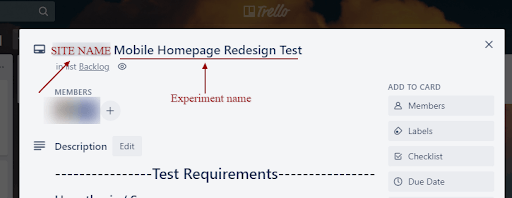

Note: The following uses examples from Trello, as the majority of our clients use it, but the format of the information would be the same on any other project management and communication platform.

1. Experiment Name

Specify a test name to help both us and you identify that particular test. This will be used as the experiment name in the testing tool, too.

The name you provide will also be used as the title of that experiment or task in whatever medium we use to communicate and exchange information.

For example, suppose you use the tool Optimizely, and we are communicating via Trello cards. You are redesigning the homepage for the mobile site and name it “Mobile homepage redesign test.” Then the test name in Optimizely will be “Mobile homepage redesign test,” and the title of the Trello card will be the same too.

This helps us find the tasks and the experiment easily.

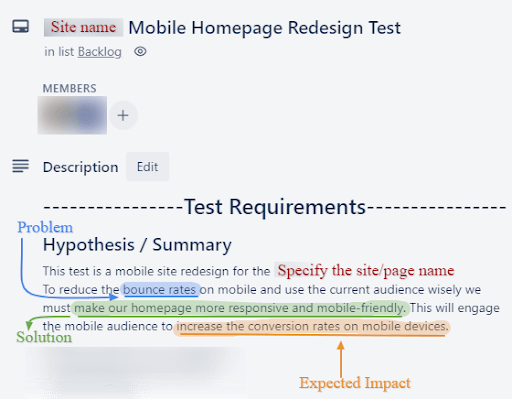

2. Hypothesis

You’ll need to provide a hypothesis to us so we know what you want to validate through the experiment. To form a good hypothesis, use a three-part approach:

- Problem > Solution > Result expectations.

This should state why you want to change, what you want to change through the experiment, and what impact you expect from it. Start with the problem, provide the change you think is a solution to it, and explain what you think will happen once that is implemented.

For the result, describe the relationship being tested with the experiment and what you’re trying to learn. Write out the hypothesis in the standard format:

Example: “If X is related to Y, then we predict this result.”

This will help us understand your requirements and the task better.

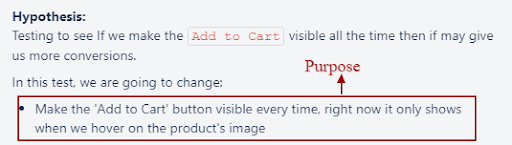

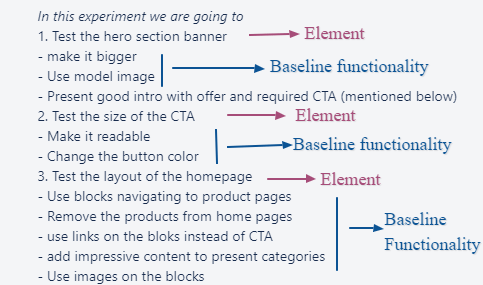

3. Experiment Details

Document all the details about the test to help us understand the context.

Help us turn your idea into reality. Provide the specific details you want included in the changes we’ll make to the element, page, or section for the experiment. The more specific the details you provide, the more accurately we’ll be able to design and code it. This section can include all the information we need to understand the task better.

Things to consider:

- Purpose of the test:

- Apart from the hypothesis, the purpose here means, “What do you want the experiment to do?” For example: In an experiment with a CTA change, what do you want the CTA to ultimately do in terms of functionality? Be specific here. Consider it a value proposition for the experiment.

A defined purpose helps us keep it in mind while designing the experiment.

- Description of the baseline functionality:

- If the change is functionality based, define the baseline functionality so we understand what the element is supposed to do in the changed version. For example: On the homepage, you want to change the standard hero banner into a three-fold slider banner. Then specify whether you want it to be a manual slider, an auto slider, or both. What are the time intervals for the images to slide? Which links are attached to each slide? Are there CTAs or not? Provide us with details for the functionality so we can make it work the way you want it to work.

Note: You can also include the following details within this section, but they’re optional. Or you can mention them separately under a dedicated heading.

- Element(s) of the pages changing in the variations:

- Whether it is a single element, multiple elements, or a whole page, specify the elements that are going to be changed in the variation.

- Goals being measured:

- What goals do you want to track in analytics? Is it page views, clicks, leads, form fills, checkouts, scroll, time spent, exit time, or something else? Specifying these will make it easier for you to analyze the results. We’ll put those goals up for tracking in the testing tool, as well as in Google Analytics if necessary.

- Technical considerations:

- If there is any specific technical consideration we should be aware of, specify so in the details. That way, we won’t get stuck in the middle of the test. This will save time, and the experiment will run smoothly, too.

- Pages being tested:

- In this part, you provide links to the specific pages you want us to run the experiments on so we don’t miss any page while running the test. Specify this even if it is a single page, as we need to know which one to get started on.

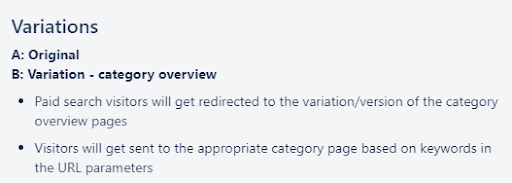

4. Variations

Please provide a summary of each variation that should be created in the experiment. Specify how many variations you want, including the original, and summarize how each version should look. This makes it easier for us to understand your needs and build without any confusion.

The variation summary differs depending on the type of test.

- Do you want the original version to include any changes, or not?

- How many variations are there going to be?

Example:

- Variation 1: Variation Name

- Variation Summary

- Variation 2: Variation Name

- Variation Summary

That way we can tell the variations apart. We will build each variation according to its specified summary.

5. Functional Requirements

Please provide details about how the features of each variation should work. Functional requirements can be as detailed as you need to customize the experiment.

- Explain what change each variation will represent.

- Include links and images of the mockup (if any) for the devices you want to run the experiment on.

- Explain how the element being optimized is supposed to work after the change is applied.

6. Design Assets

If you have any mockups, wireframes, or examples regarding the desired design that can give us an exact idea of how you want the variation to look, leave those details here.

Please provide all the design assets (e.g., a Photoshop document) for the experiment. If assets are variation specific, please note which assets are associated with which variations.

7. Tool Settings (If Any)

Mention the A/B testing tools you use to build the tests on your site, and specify if they have any special settings which could affect our building process in any way. You can also instruct us to use any specific feature or tool setting suitable for the test. If you don’t have one yet, you can get started with Google Optimize for free before deciding to go for a paid tool.

Examples:

- Optimizely.

- Adobe Target.

- VWO.

- Google Optimize.

8. URL Targeting

This is the most important detail we need from you to get started. URL targeting involves intricate details, and if they’re not correct, the experiment’s results could be compromised.

- Specify the link to the page that is going to be tested so we can trigger the changes on it.

- There could be more than one link, depending on the type of test.

- Specify the links to the pages, with the name of the pages, if multiple.

- Even if it is a sitewide change, let us know if there are any exception pages.

- This is to make sure the rest of your site won’t be affected by the experiment, and it’s mandatory for calculating the right results in the end.

- Provide the URL of all the pages that will be included in this experiment.

- If the experiment targets multiple URLs, please provide a “default” URL (which will be used for preview links).
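The targeting rules above can be sketched in code. This is a minimal Python illustration of the idea, not how any particular testing tool implements targeting. The `UrlTargeting` class, its field names, and the example.com URLs are all hypothetical.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to host + path so query strings and trailing slashes are ignored."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return f"{parts.netloc.lower()}{path}"


@dataclass
class UrlTargeting:
    # Hypothetical structure for illustration; not a real testing-tool API.
    include: list = field(default_factory=list)   # pages the experiment runs on
    exclude: list = field(default_factory=list)   # exception pages
    sitewide: bool = False                        # True if the whole site is targeted
    default_url: str = ""                         # used for preview links

    def matches(self, url: str) -> bool:
        page = normalize(url)
        # Exception pages always win, even for sitewide experiments.
        if page in {normalize(u) for u in self.exclude}:
            return False
        if self.sitewide:
            # Assumption: "sitewide" means any page on the default URL's host.
            site_host = urlsplit(self.default_url).netloc.lower()
            return urlsplit(url).netloc.lower() == site_host
        return page in {normalize(u) for u in self.include}


targeting = UrlTargeting(
    include=["https://www.example.com/", "https://www.example.com/pricing"],
    exclude=["https://www.example.com/checkout"],
    default_url="https://www.example.com/",
)
sitewide_targeting = UrlTargeting(
    exclude=["https://www.example.com/checkout"],
    sitewide=True,
    default_url="https://www.example.com/",
)
```

Writing the rules down this explicitly, including the exception pages and the default URL, is what lets a developer confirm that the rest of the site stays unaffected.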

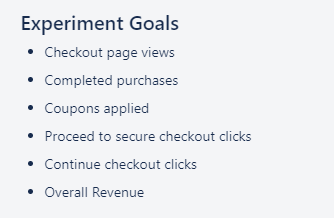

9. Experiment Goals

These are simply the user “actions” you want to keep an eye on to know whether a version is achieving what you expected. Experiment goals in an A/B test are the measurements that let the results identify a winner.

- Goals can either be set by you or you can let us know what goals you want us to set for you. That goal would then be tracked via the A/B testing tool in which the test is running.

- Goals for the experiment will be set up in Optimizely. If possible, please specify if these are existing goals in your Optimizely project or if we should create new ones.

Types of goals include:

- Click goals.

- Pageview goals.

- Revenue goals.

- Custom JavaScript goals.

For example: On a checkout page experiment, you might ask us to create goals such as checkout page clicks, form fills, and exits.

Note: The revenue and custom JavaScript goals will require your developers to add JavaScript to the page(s).

10. Audience Targeting

Audience targeting means exposing the experiment to a specific audience based on whatever criteria seem most suitable. It isn’t mandatory; whether you need it depends on the test. If there is a need to target a specific audience, let us know which criteria you want to target. We’ll set those criteria in the tool with the help of whatever analytics tool you’re already using.

Providing the audience targeting in the A/B test requirements defines which visitors are included in the test. It also specifies how the visitors will be randomized between the original and the variations. If possible, please specify whether an existing audience should be used or a new one should be created. These can be default Optimizely audiences or custom audiences. Default audiences include:

- Browser or device.

- Location (geo targeting).

- Ad campaign.

- Cookie.

- Query parameter.

- New or returning session.

- Traffic source.

- Time of day.

For example: If you only want to include organic traffic in the experiment, then all the traffic coming from social media, affiliate links, and backlinks won’t see the variations, as they are not included in the audience targeting. Also, if you only want it to run on the desktop version, in the audience targeting section, you’ll specify:

- Organic traffic on desktop only.
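As a rough illustration, the "organic traffic on desktop only" rule can be expressed as a pair of conditions that must both hold. The `Visitor` and `Audience` classes below are hypothetical and only meant to show the logic. In practice, the testing tool evaluates these conditions for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visitor:
    # Hypothetical attributes; a real tool derives these from the visit itself.
    traffic_source: str  # e.g. "organic", "social", "affiliate", "referral"
    device: str          # e.g. "desktop", "mobile", "tablet"


@dataclass(frozen=True)
class Audience:
    traffic_sources: frozenset
    devices: frozenset

    def includes(self, visitor: Visitor) -> bool:
        """A visitor qualifies only if every condition matches."""
        return (
            visitor.traffic_source in self.traffic_sources
            and visitor.device in self.devices
        )


# The audience from the example: organic traffic on desktop only.
organic_desktop = Audience(frozenset({"organic"}), frozenset({"desktop"}))
```

A visitor arriving from social media on desktop, or from organic search on mobile, fails one of the two conditions and therefore never enters the experiment.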

11. Traffic Allocation

Traffic allocation is the percentage of the audience you want to have divided randomly between the original and the variations.

Provide traffic allocation details. If not specified, we’ll use the default (100% allocation split evenly between baseline and variations):

- Total traffic that should see the test (100%, 50%, 25% etc.).

- Of the total traffic, how much traffic should be allocated to each variation on a percentage basis (must add up to 100%).

- For test validity purposes, equal traffic should be allocated to each variation in an experiment to ensure we are not skewing the results.

For example: For a test with only the original and one variation, suppose you want half of the audience coming to the experiment page to see the original version and the other half to fall into the variation bucket and see the changes.

Then the traffic allocation you would provide is:

- Total traffic: 100%.

- Original: 50%.

- Variation 1: 50%.
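For readers curious how an allocation like this turns into per-visitor assignments, here is a minimal Python sketch. It assumes hash-based bucketing, which is one common approach. It does not describe how Optimizely or any other specific tool works internally, and the function names and the `visitor_id` parameter are hypothetical.

```python
import hashlib
from typing import Optional


def validate_split(split: dict) -> None:
    """The per-variation percentages must add up to 100."""
    total = sum(split.values())
    if abs(total - 100.0) > 1e-9:
        raise ValueError(f"variation split adds up to {total}, expected 100")


def _bucket(experiment: str, salt: str, visitor_id: str) -> float:
    """Map a visitor to a stable point in [0, 100) using a hash."""
    digest = hashlib.sha256(f"{experiment}:{salt}:{visitor_id}".encode()).hexdigest()
    return int(digest[:8], 16) / 0x100000000 * 100


def assign(visitor_id: str, experiment: str, exposure: float, split: dict) -> Optional[str]:
    """Return the variation for a visitor, or None if they are outside the test."""
    validate_split(split)
    # Step 1: decide whether the visitor is part of the exposed traffic at all.
    if _bucket(experiment, "exposure", visitor_id) >= exposure:
        return None
    # Step 2: place the visitor in a variation according to the split.
    point = _bucket(experiment, "variation", visitor_id)
    cumulative = 0.0
    name = None
    for name, share in split.items():
        cumulative += share
        if point < cumulative:
            return name
    return name  # guard against floating-point rounding at the upper edge


even_split = {"original": 50, "variation_1": 50}
```

Because the assignment depends only on the visitor ID and the experiment name, the same visitor sees the same version on every visit, which is what keeps the comparison between buckets valid.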

Conclusion

These are all the details an A/B test campaign message will need. Your A/B test developer will ask you for any additional information as per the requirement of a particular experiment. This is to ensure there is no friction while building the test.
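If you keep your briefs in a structured form, a short script can flag missing details before the message is sent. The sketch below is hypothetical: the `ExperimentBrief` fields mirror the eleven headings in this article, and the choice of which fields count as required is our assumption for illustration, based on the sections above that describe themselves as optional or as having a default.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentBrief:
    # One field per heading in the checklist; names are illustrative only.
    name: str = ""
    hypothesis: str = ""
    experiment_details: str = ""
    variations: list = field(default_factory=list)
    functional_requirements: str = ""
    design_assets: list = field(default_factory=list)
    tool_settings: str = ""
    target_urls: list = field(default_factory=list)
    goals: list = field(default_factory=list)
    audience: str = ""
    traffic_allocation: str = ""


# Assumption: these are the fields a developer cannot start without.
REQUIRED = ("name", "hypothesis", "experiment_details", "variations", "target_urls")


def missing_fields(brief: ExperimentBrief) -> list:
    """List the required fields that are still empty."""
    return [name for name in REQUIRED if not getattr(brief, name)]


draft = ExperimentBrief(
    name="Mobile homepage redesign test",
    target_urls=["https://www.example.com/"],
)
print(missing_fields(draft))  # ['hypothesis', 'experiment_details', 'variations']
```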

Our clients use this format to communicate with our team of A/B test developers while creating an experiment task. That way we don’t assume anything, and everything goes per the client’s instruction. This allows us to mirror the client’s vision in the experiment.

If this has sparked your interest in getting started with A/B testing, please contact us.